Class 6: The Bigger Picture#

Guest speaker#

Riya Singh#

Riya Singh is an agile data leader, public health researcher and product manager dedicated to designing, developing and managing context-appropriate data products for low-resource populations. As the Director of Data & Analytics of Dimagi’s US Health Division, Riya oversees the technical, product and business strategy of various data offerings and serves as a strategic advisor to their partners. Previously, Riya led large-scale data technology implementations in the US and India at the local, state and federal levels. Riya has a background in public health research and consulting and studied Cognitive Science and Human Rights at UC Berkeley.

Questions?#

Final Project peer grading#

Ask Me Anything (AMA)#

I have slides on “Python beyond data analysis” as a backup, but I would rather talk about what you want to hear about.

Python beyond data analysis#

We’ve been focusing on using Python and pandas for data analysis. What else is Python used for?

Data engineering#

Automation / recurring processes

Copying/moving/processing/publishing data, especially Big Data

Monitoring/alerting
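To make those three bullets concrete, here is a minimal sketch of a recurring job that copies CSV files from one folder to another and logs a warning when a file looks wrong. The function name `publish_csvs`, the folder names, and the "skip empty files" rule are all made up for illustration; a real pipeline would have its own checks and would usually be run on a schedule by some other tool.

```python
import logging
import shutil
import tempfile
from pathlib import Path

logging.basicConfig(level=logging.INFO)


def publish_csvs(source_dir, dest_dir):
    """Copy every non-empty CSV from source_dir to dest_dir; return the names copied."""
    source, dest = Path(source_dir), Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    copied = []
    for csv_path in sorted(source.glob("*.csv")):
        if csv_path.stat().st_size == 0:
            # Monitoring/alerting: flag suspicious input instead of publishing it.
            logging.warning("Skipping empty file: %s", csv_path.name)
            continue
        shutil.copy2(csv_path, dest / csv_path.name)
        copied.append(csv_path.name)
    logging.info("Copied %d file(s)", len(copied))
    return copied


# Try it out in a throwaway folder (hypothetical file names and contents).
with tempfile.TemporaryDirectory() as tmp:
    incoming = Path(tmp) / "incoming"
    incoming.mkdir()
    (incoming / "a.csv").write_text("id,value\n1,10\n")
    (incoming / "empty.csv").write_text("")
    result = publish_csvs(incoming, Path(tmp) / "published")
```

Everything here comes from the Python standard library, so nothing extra needs to be installed.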

Web development#

Building web sites that are interactive (more than just content)

Forms

Presenting data

Workflows, such as:

Signing up for things

Paying for things

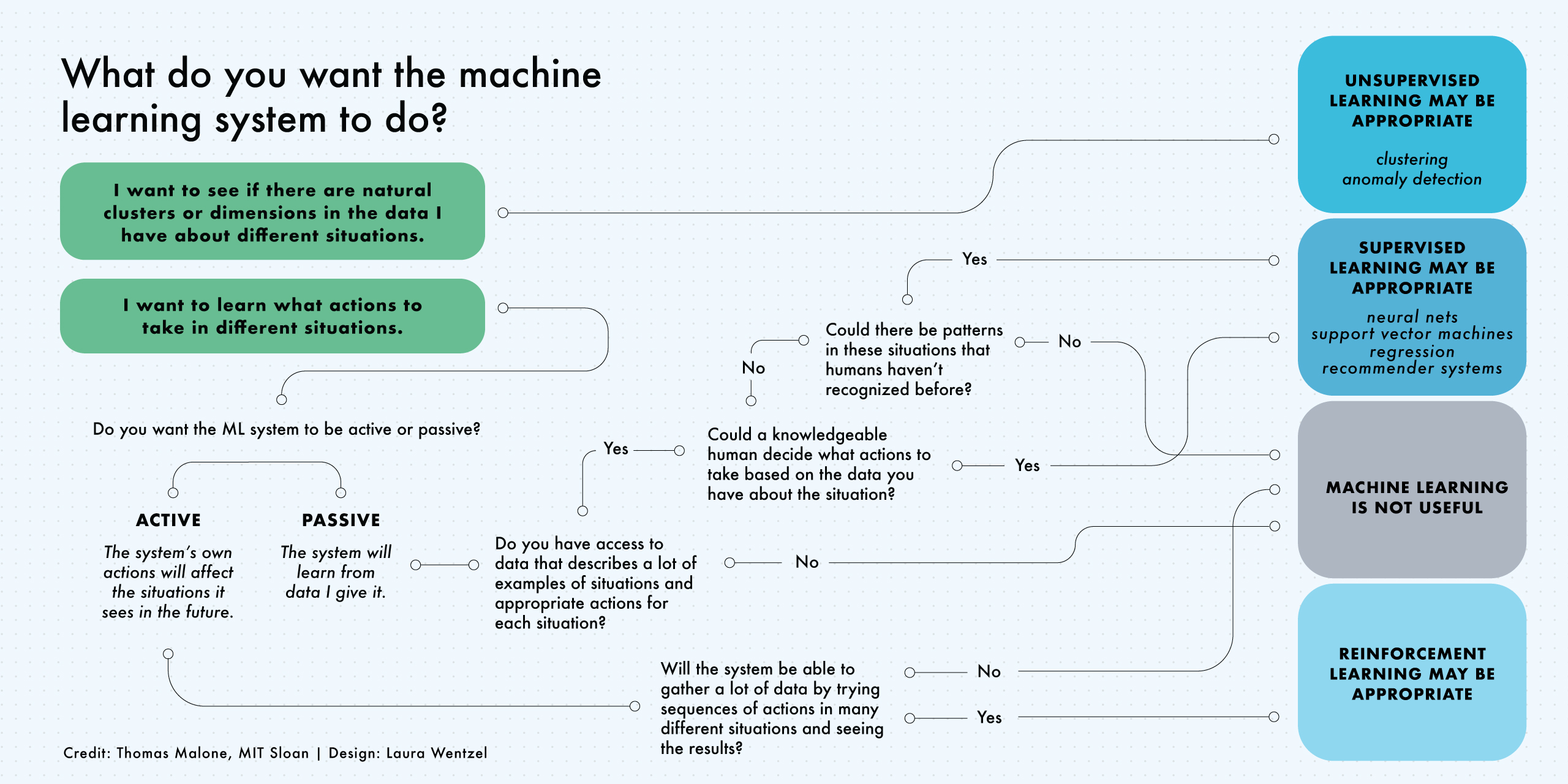

Machine learning#

Statistics, but fancy

Building models

Finding patterns

Recommendations

Detection

When people say “artificial intelligence,” they usually mean “machine learning.”

Source, with more thorough explanation

The process#

High-level

Create a model

Gather a bunch of data for training

If supervised machine learning, label it (give it the right answers)

Segment into training and test data

Train the model against the training dataset (have it identify patterns)

Test the model against the test dataset

Run against new data

If reinforcement learning, model refines itself
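The supervised version of the steps above can be sketched in a few lines of plain Python. This is only an illustration: the data is made up, and the "model" (pick whichever label has the closest average) is a deliberately simple stand-in chosen so the example needs nothing beyond the standard library. Real projects typically use a library such as scikit-learn and far more data.

```python
import random
from statistics import mean

# 1. Gather and label data. These values are made up for illustration:
#    each row is (hours_studied, passed), with the "right answer" attached.
labeled = [(hours, hours >= 10) for hours in range(20)]

# 2. Segment into training and test data (80% / 20%).
rng = random.Random(0)  # fixed seed so the split is repeatable
rng.shuffle(labeled)
split = int(len(labeled) * 0.8)
train, test = labeled[:split], labeled[split:]


# 3. Train: the "model" here is just the average hours for each label.
def fit(rows):
    return {
        label: mean(hours for hours, row_label in rows if row_label == label)
        for label in (True, False)
    }


# Predict by picking the label whose average is closest to the input.
def predict(model, hours):
    return min(model, key=lambda label: abs(hours - model[label]))


model = fit(train)

# 4. Test against data the model has not seen during training.
correct = sum(predict(model, hours) == label for hours, label in test)
accuracy = correct / len(test)
print(f"Accuracy on test data: {accuracy:.0%}")

# 5. Run against new data.
print("Prediction for 3 hours of study:", predict(model, 3))
```

Holding back the test rows matters: checking the model only against data it was trained on would say little about how it handles new data.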

You have a head start: The fundamentals are applicable anywhere you’re using code.

Thanks to the Reader!

Thank you!#

Keep in touch.